What are the available options for log management?

Logs are everywhere: systems, applications, users, devices, thermostats, refrigerators, microwaves, you name it. As your deployment grows, so does its complexity. When you need to analyze an incident or an outage, logs are your lifesaver.

There are plenty of tools available: open source, pay-per-use, and a few others. Let's take a look at some of them.

What tools and frameworks are available to store and analyze these logs, ideally in real time or, failing that, after the fact?

Splunk:

Splunk is powerful log analysis software that you can run either in your own enterprise data center or in the cloud. It comes in several editions:

1. Splunk Enterprise: Search, monitor and analyze any machine data for powerful new insights.

2. Splunk Cloud: Splunk Enterprise and all its features, delivered as SaaS in the cloud.

3. Splunk Light: A scaled-down version of Splunk Enterprise, offering log search and analysis for small IT environments.

4. Hunk: Hunk provides the power to rapidly detect patterns and find anomalies across petabytes of raw data in Hadoop without the need to move or replicate data.

Apache Flume:

Flume is a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of log data. It has a simple and flexible architecture based on streaming data flows. It is robust and fault-tolerant, with tunable reliability mechanisms and many failover and recovery mechanisms. It uses a simple, extensible data model that allows for online analytic applications.

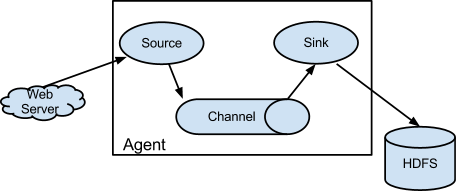

Flume is deployed as one or more agents, each running in its own JVM (Java Virtual Machine). An agent has three kinds of components: sources, channels, and sinks, and it needs at least one of each in order to run. Sources collect incoming data as events, channels queue those events, and sinks write them out to their destination. Flume lets Hadoop users ingest high-volume streaming data directly into HDFS for storage.

Image credit: flume.apache.org
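To make the source/channel/sink split concrete, here is a minimal Python sketch of a single agent's data flow. It is a toy model, not Flume's API: Flume itself is written in Java and configured through properties files, and the class names below (`Event`, `MemoryChannel`, `LineSource`, `ListSink`) are hypothetical stand-ins. The channel is assumed to be a bounded in-memory queue, and the sink writes to a Python list in place of a real destination such as HDFS.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Event:
    """Toy Flume-style event: a byte payload plus optional string headers."""
    body: bytes
    headers: dict = field(default_factory=dict)


class MemoryChannel:
    """Hypothetical bounded in-memory queue between a source and a sink."""

    def __init__(self, capacity: int = 100):
        self.capacity = capacity
        self._queue = deque()

    def put(self, event: Event) -> bool:
        # Refuse the event when full. A real source would back off and retry;
        # this sketch simply reports the event as rejected.
        if len(self._queue) >= self.capacity:
            return False
        self._queue.append(event)
        return True

    def take(self):
        # Return the oldest queued event, or None if the channel is empty.
        return self._queue.popleft() if self._queue else None


class LineSource:
    """Hypothetical source: turns raw log lines into events."""

    def __init__(self, channel: MemoryChannel):
        self.channel = channel

    def ingest(self, lines) -> int:
        accepted = 0
        for line in lines:
            if self.channel.put(Event(body=line.encode("utf-8"))):
                accepted += 1
        return accepted


class ListSink:
    """Hypothetical sink: drains the channel into a list (stand-in for HDFS)."""

    def __init__(self, channel: MemoryChannel):
        self.channel = channel
        self.written = []

    def drain(self) -> int:
        count = 0
        while (event := self.channel.take()) is not None:
            self.written.append(event.body.decode("utf-8"))
            count += 1
        return count


channel = MemoryChannel(capacity=2)
source = LineSource(channel)
sink = ListSink(channel)

print(source.ingest(["app started", "user login", "disk full"]))  # 2 (channel holds only 2)
print(sink.drain())   # 2
print(sink.written)   # ['app started', 'user login']
```

The channel is what decouples the two ends: the source can keep accepting events while the sink is slow, up to the channel's capacity, which is the role the queue plays in the description above.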

Apache Kafka:

Apache Kafka is publish-subscribe messaging rethought as a distributed commit log. It is fast, scalable, durable, and distributed by design. Kafka started as a LinkedIn project, was later open-sourced, and is now a top-level Apache project. Many companies have deployed Kafka in their infrastructure.

Kafka is a distributed, partitioned, replicated commit log service. It provides the functionality of a messaging system, but with a unique design.

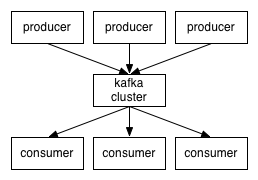

- Kafka maintains feeds of messages in categories called topics.

- We'll call processes that publish messages to a Kafka topic producers.

- We'll call processes that subscribe to topics and process the feed of published messages consumers.

- Kafka runs as a cluster of one or more servers, each of which is called a broker.

Image credit: kafka.apache.org
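The vocabulary above (topics, producers, consumers, brokers) can be illustrated with a toy, single-process Python model. This is an assumption-laden sketch rather than Kafka's client API: `Broker`, `Producer`, and `Consumer` are hypothetical classes, partitions are plain lists, replication and consumer groups are left out, and the key-to-partition hash uses `zlib.crc32` for simplicity (Kafka's default partitioner uses a different hash, murmur2). What it does show is the commit-log idea: messages are appended to partitions, each message gets an offset, and each consumer tracks how far it has read.

```python
import zlib
from collections import defaultdict


class Topic:
    """Toy topic: a fixed number of append-only partitions (plain lists)."""

    def __init__(self, name: str, num_partitions: int):
        self.name = name
        self.partitions = [[] for _ in range(num_partitions)]


class Broker:
    """Toy single broker holding topics by name (no replication)."""

    def __init__(self):
        self.topics = {}

    def create_topic(self, name: str, num_partitions: int):
        self.topics[name] = Topic(name, num_partitions)

    def append(self, topic: str, partition: int, message) -> int:
        log = self.topics[topic].partitions[partition]
        log.append(message)
        return len(log) - 1  # offset assigned to the new message

    def read(self, topic: str, partition: int, offset: int, max_messages: int = 10):
        return self.topics[topic].partitions[partition][offset:offset + max_messages]


class Producer:
    def __init__(self, broker: Broker):
        self.broker = broker

    def send(self, topic: str, key: str, value: str):
        n = len(self.broker.topics[topic].partitions)
        # Same key -> same partition, so ordering is preserved per key.
        partition = zlib.crc32(key.encode("utf-8")) % n
        offset = self.broker.append(topic, partition, (key, value))
        return partition, offset


class Consumer:
    def __init__(self, broker: Broker, topic: str):
        self.broker = broker
        self.topic = topic
        self.offsets = defaultdict(int)  # next offset to read, per partition

    def poll(self):
        # Read new messages from every partition and advance this consumer's offsets.
        batch = []
        for p in range(len(self.broker.topics[self.topic].partitions)):
            msgs = self.broker.read(self.topic, p, self.offsets[p])
            self.offsets[p] += len(msgs)
            batch.extend(msgs)
        return batch


broker = Broker()
broker.create_topic("app-logs", num_partitions=3)
producer = Producer(broker)
for i in range(5):
    producer.send("app-logs", key="web-01", value=f"request {i}")
producer.send("app-logs", key="db-01", value="slow query")

consumer = Consumer(broker, "app-logs")
print(len(consumer.poll()))  # 6
print(consumer.poll())       # [] (nothing new since the last poll)
```

In this toy, offsets belong to the consumer rather than the broker, so a second `Consumer` created against the same broker would re-read all six messages from the beginning. That independence of readers is what makes a commit log convenient for feeding the same log stream to several downstream systems.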

Kafka has a good ecosystem surrounding the core product. With a wide range of components to choose from, it can be a good "free" log management option. For large deployments, Kafka can act as the broker for multiple publishers: syslog-ng (with an agent running on each system) or Fluentd (again with agents running on each node, plus a Kafka plugin) can take care of log collection. Applications that already use the log4j framework can ship their logs to Kafka seamlessly through the log4j appender. Once the logs are ingested through these subsystems, however, searching them can be cumbersome. One alternative is to dump the data from Kafka into HDFS and run Hive queries against it, and voilà, you have your analysis.
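As an illustration of that after-the-fact analysis step, here is a small Python sketch that loads log lines into a SQL table and runs the kind of aggregate query one might write in Hive. It uses Python's built-in `sqlite3` purely as a stand-in for Hive on HDFS; the log format, the `parse` helper, and the sample lines are all hypothetical.

```python
import sqlite3

# Hypothetical log lines, as they might look after being batched out of Kafka.
# Assumed format: "<date> <time> <host> <level> <message>".
raw_lines = [
    "2016-03-01 10:00:01 web-01 INFO request served",
    "2016-03-01 10:00:02 web-01 ERROR upstream timeout",
    "2016-03-01 10:00:03 db-01 WARN slow query",
    "2016-03-01 10:00:04 web-02 ERROR upstream timeout",
    "2016-03-01 10:00:05 web-01 ERROR disk full",
]


def parse(line: str):
    # Split off the first four fields; the rest of the line is the message.
    date, time, host, level, message = line.split(" ", 4)
    return f"{date} {time}", host, level, message


conn = sqlite3.connect(":memory:")  # in-memory stand-in for a Hive table
conn.execute("CREATE TABLE logs (ts TEXT, host TEXT, level TEXT, message TEXT)")
conn.executemany("INSERT INTO logs VALUES (?, ?, ?, ?)", (parse(l) for l in raw_lines))

# The kind of aggregate one might run in Hive: error count per host.
error_counts = conn.execute(
    """
    SELECT host, COUNT(*) AS errors
    FROM logs
    WHERE level = 'ERROR'
    GROUP BY host
    ORDER BY errors DESC, host
    """
).fetchall()
print(error_counts)  # [('web-01', 2), ('web-02', 1)]
```

The query itself is plain SQL, which is the appeal of the HDFS-plus-Hive route; the cost is that results arrive in batch rather than in real time.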

There is still some work to be done, though, to make these logs as easy to retrieve and explore as they would be through something like a Kibana dashboard.

ELK:

When talking about logs, how can we not mention the ELK stack (Elasticsearch, Logstash, and Kibana)? When I was introduced to it, it was presented as an open source alternative to Splunk. I agree: it has the feature set to compete with the core Splunk product, and at the right size (think small to medium deployments) we may not need Splunk at all, because the ELK stack might be good enough. That said, in recent usage we have run into some scalability issues once we reach a few hundred gigabytes of logs per day.

One feature I do like about the ELK stack is that it is all-in-one: my log aggregator (Logstash), search indexer (Elasticsearch), and dashboard (Kibana) all come in a single suite of applications.
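The search indexer is what makes ELK feel fast to query: Elasticsearch builds on Apache Lucene, whose core structure is an inverted index, a map from each term to the documents that contain it. The Python sketch below is a deliberately simplified illustration of that idea, not Elasticsearch's implementation; the `tokenize` function is a crude, hypothetical analyzer, and there is no relevance scoring, sharding, or persistence.

```python
import re
from collections import defaultdict


def tokenize(text: str):
    # Lowercase and split on runs of non-alphanumeric characters (a rough analyzer).
    return [t for t in re.split(r"[^a-z0-9]+", text.lower()) if t]


class InvertedIndex:
    def __init__(self):
        self.docs = []
        self.postings = defaultdict(set)  # term -> set of document ids

    def add(self, text: str) -> int:
        doc_id = len(self.docs)
        self.docs.append(text)
        for term in tokenize(text):
            self.postings[term].add(doc_id)
        return doc_id

    def search(self, query: str):
        """Return documents containing every query term (AND semantics)."""
        terms = tokenize(query)
        if not terms:
            return []
        # .get avoids creating empty entries in the defaultdict for unknown terms.
        ids = set.intersection(*(self.postings.get(t, set()) for t in terms))
        return [self.docs[i] for i in sorted(ids)]


index = InvertedIndex()
index.add("ERROR web-01 upstream timeout")
index.add("INFO web-01 request served")
index.add("ERROR db-01 disk full")
print(index.search("error web-01"))  # ['ERROR web-01 upstream timeout']
```

A search only touches the posting sets for the query terms instead of scanning every log line, which is why indexed search stays responsive as log volume grows (up to the scaling limits mentioned above).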

With so many choices, it can be hard to settle on one. If you have the budget, Splunk might be the right choice; if you can dedicate developers to it instead, you may be better off with either the ELK stack or Kafka, depending on the scale at which you are growing.